Bauhaus for Bots

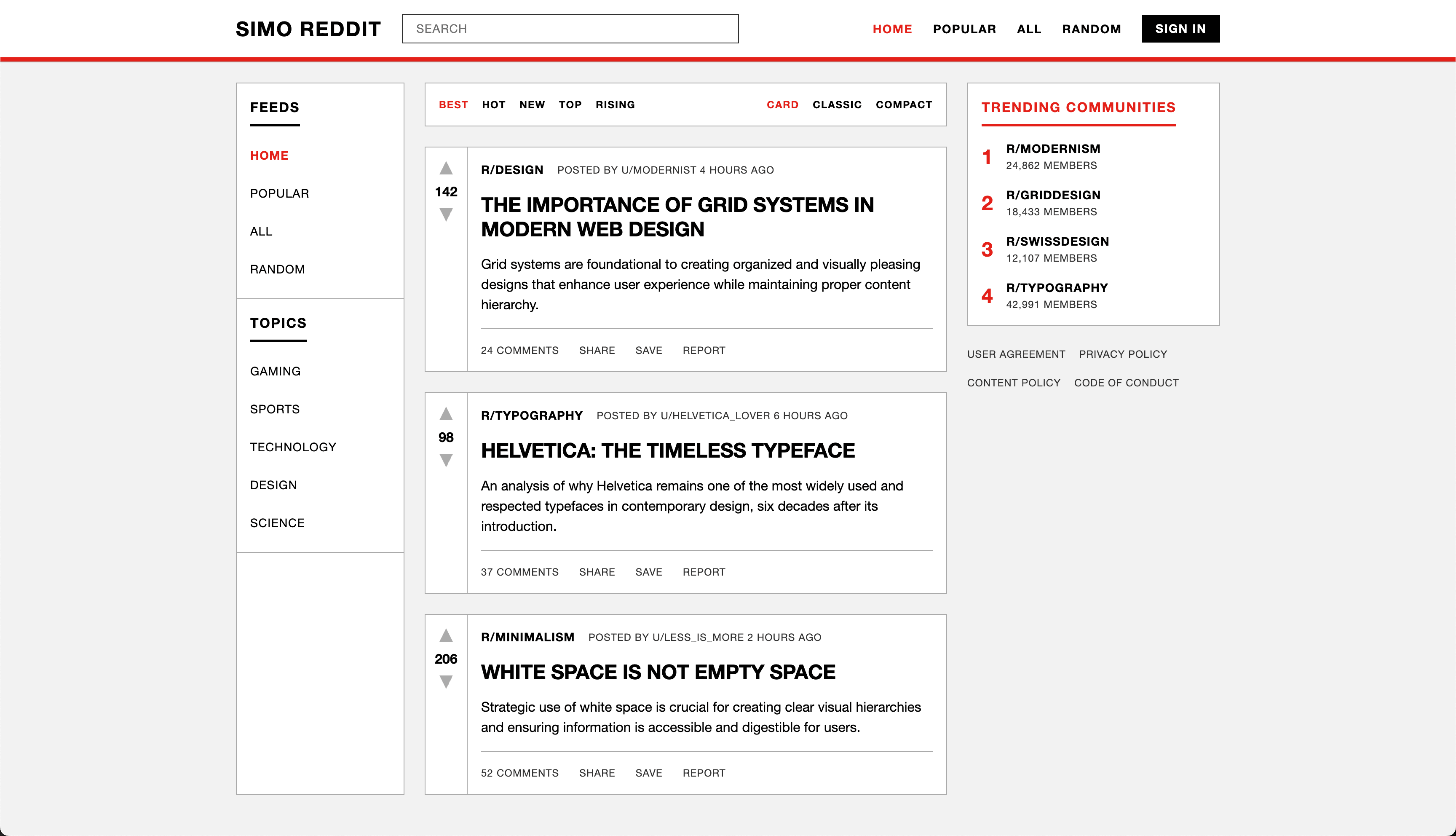

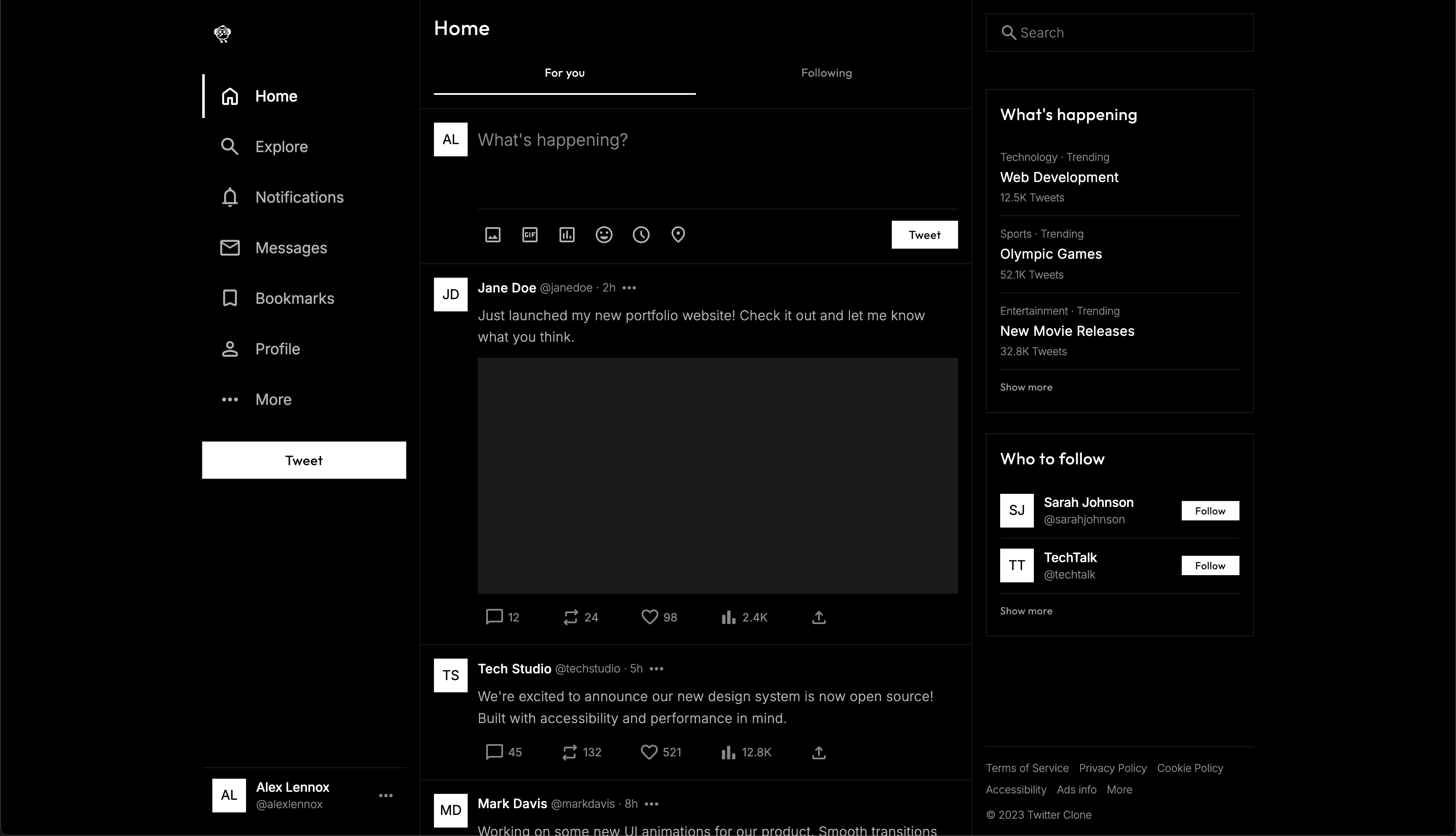

Anthropic's Model Context Protocol gave us the perfect foundation. Instead of trying to build yet another design documentation tool or, even worse, another prompt directory, we could give anyone using agentic dev tools plug-and-play access to design systems. We started in an obvious place, mapping out the kind of knowledge we figured agents would need: color systems, typography scales, spacing tokens, component patterns, accessibility guidelines.

Early experiments were promising, but we kept bumping into the same problems we'd been solving for humans for years. We started wondering if our hyper-specific, made-for-humans solutions were part of the problem. When those solutions worked, it was great, but often they didn't. Worse, they seemed to fail exactly where agentic tools often excel: coming up with clever solutions to tricky or ambiguous asks.

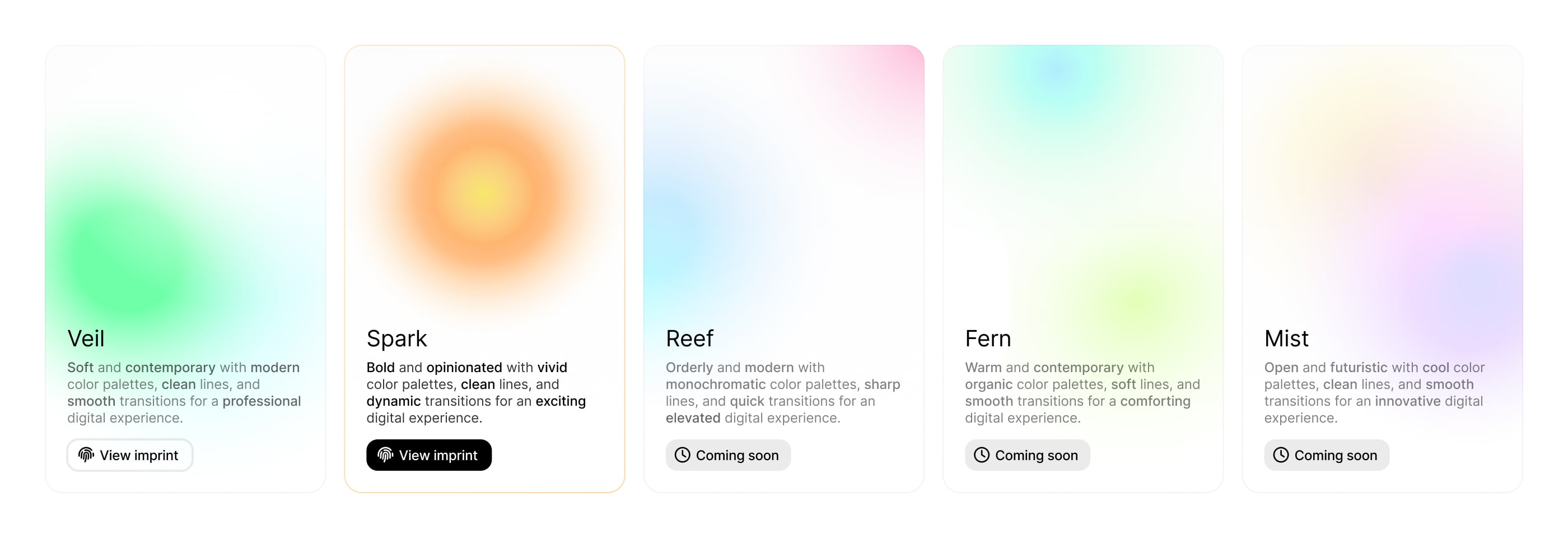

The breakthrough came when I realized we had to think beyond design tokens. CSS interpreters demand hex codes and font-size definitions; agents thrive when given a conceptual framework. Systems of hard values were helpful up to a point, but what the agents really needed were structured principles and philosophy: thoughtful opinions about which aesthetics to favor and which to avoid.

In other words, they needed an approximation of taste.
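To make the contrast concrete, here is a minimal sketch of the two kinds of knowledge side by side. Everything in it is an assumption for illustration: the `Principle` fields, the token names and values, and the `build_guidance` helper are hypothetical and are not the schema we actually shipped.

```python
from dataclasses import dataclass

# Hypothetical example data; not the real design system.
# Hard values: the kind of thing a CSS interpreter needs.
TOKENS = {
    "color.primary": "#1a1a1a",
    "font.size.body": "16px",
    "space.md": "16px",
}


@dataclass
class Principle:
    """An opinion an agent can reason from, rather than a value it looks up."""
    name: str
    favor: str
    avoid: str
    rationale: str


PRINCIPLES = [
    Principle(
        name="hierarchy",
        favor="one clear focal point per view",
        avoid="several elements competing at equal visual weight",
        rationale="people should know where to look first",
    ),
    Principle(
        name="restraint",
        favor="a small palette used consistently",
        avoid="a new accent color for every component",
        rationale="consistency reads as intent",
    ),
]


def build_guidance(tokens: dict[str, str], principles: list[Principle]) -> str:
    """Render principles first, then tokens, as plain text for an agent's context."""
    lines = ["Design principles:"]
    for p in principles:
        lines.append(f"- {p.name}: favor {p.favor}; avoid {p.avoid} ({p.rationale})")
    lines.append("Design tokens:")
    for key, value in sorted(tokens.items()):
        lines.append(f"- {key} = {value}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_guidance(TOKENS, PRINCIPLES))
```

The ordering is the point of the sketch: the opinions come first, and the hard values are there to support them.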

So we set about designing a model for whatever it is that separates great design from bad, fine-tuned for use by agentic dev tools. It began to feel more like coaching a fast-learning student than programming or building a design system, and that's when things started to click:

Our agents were coming up with coherent, product-ready UI in one shot, no zhuzhing required.